Enhanced AI Ethics: Version 2.0

Introduction

Artificial intelligence (AI) is becoming increasingly prevalent in our daily lives, from virtual assistants like Siri and Alexa to self-driving cars. As AI technology advances, it raises important ethical questions about how we should program machines to make decisions that align with human values. This is the field of AI morality, and it is rapidly evolving.

AI Morality 1.0

The first wave of AI morality focused on creating rules and guidelines for machines to follow. This approach was based on the assumption that humans could anticipate all possible scenarios and create a set of rules that would govern AI behavior. However, this approach has proven to be inadequate, as it is impossible to anticipate every possible scenario that an AI system might encounter.

One example of the limitations of AI Morality 1.0 is the famous “trolley problem.” In this thought experiment, a trolley is hurtling down a track and will hit and kill five people. You have the option to divert the trolley onto a different track, where it will only hit and kill one person. What should you do? This scenario illustrates the difficulty of creating a set of rules that can account for all possible ethical dilemmas.

AI Morality 2.0

The second wave of AI morality focuses on creating machines that can learn and adapt to ethical situations. It rests on the idea that machines can be trained to recognize ethical principles and make decisions that align with them. This approach is known as “machine ethics.”

Machine ethics involves teaching machines to recognize ethical principles and apply them in decision-making. For example, a self-driving car might be programmed to prioritize the safety of its passengers and other drivers on the road. If a pedestrian steps out in front of the car, the machine would be programmed to make a decision that minimizes harm to all parties involved.
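The self-driving car example can be made concrete with a small sketch. This is only an illustration under strong assumptions: the `Action` class, the candidate maneuvers, and the numeric harm estimates are all hypothetical, and treating every party's harm as equally weighted is itself an ethical choice rather than a settled answer.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """A candidate maneuver with hypothetical harm estimates per party (0 = none, 1 = severe)."""
    name: str
    harm: dict[str, float]


def total_harm(action: Action) -> float:
    # Assumption: harm to every party counts equally and simply adds up.
    return sum(action.harm.values())


def choose_action(actions: list[Action]) -> Action:
    """Return the candidate with the lowest total estimated harm."""
    if not actions:
        raise ValueError("at least one candidate action is required")
    return min(actions, key=total_harm)


# Made-up estimates for a pedestrian stepping in front of the car.
candidates = [
    Action("continue", {"passengers": 0.0, "pedestrian": 0.9, "other_drivers": 0.0}),
    Action("brake_hard", {"passengers": 0.1, "pedestrian": 0.2, "other_drivers": 0.1}),
    Action("swerve", {"passengers": 0.3, "pedestrian": 0.0, "other_drivers": 0.4}),
]

best = choose_action(candidates)
print(best.name)
```

The hard part is not the `min` call but where the numbers and the weighting come from, which is exactly the question of defining ethical principles in a form a machine can apply.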

Challenges of AI Morality 2.0

While machine ethics holds promise for creating more ethical AI systems, there are still many challenges to overcome. One of the biggest challenges is defining ethical principles in a way that machines can understand and apply. For example, how do we teach a machine to recognize the value of human life?

Another challenge is ensuring that machines are not biased in their decision-making. Machines are only as unbiased as the data they are trained on, and if that data is biased, the machine will be biased as well. This is a particularly important issue in areas like criminal justice, where biased AI systems could perpetuate existing inequalities.
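One simple way to check for this kind of bias is to compare how often a system flags members of different groups. The sketch below is a minimal illustration using invented records and hypothetical function names; it measures only one notion of fairness (the gap in positive-decision rates between groups) and is not a complete bias audit.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """Map each group to the share of its members that received a positive decision.

    `records` is an iterable of (group, flagged) pairs, where `flagged` is a bool.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for group, flagged in records:
        counts[group][0] += int(flagged)
        counts[group][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


def parity_gap(rates):
    """Difference between the highest and lowest group rates (0 means equal rates)."""
    return max(rates.values()) - min(rates.values())


# Invented decisions for two groups, purely for illustration.
records = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", True), ("B", False),
]

rates = positive_rate_by_group(records)
gap = parity_gap(rates)
print(rates, gap)
```

A large gap does not by itself prove unfair treatment, but it signals that the training data and the decisions deserve closer scrutiny.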

Finally, there is the challenge of ensuring that machines are transparent in their decision-making. If a machine makes a decision that harms someone, that person should be able to understand why the machine made that decision. This requires creating systems that are transparent and explainable.

Conclusion

AI morality is a rapidly evolving field, and the shift from AI Morality 1.0 to AI Morality 2.0 represents an important step forward. By creating machines that can learn and adapt to ethical situations, we can create AI systems that align with human values. However, there are still many challenges to overcome, including defining ethical principles, avoiding bias, and ensuring transparency. As AI technology continues to advance, it is important that we continue to grapple with these ethical questions and work to create machines that are truly aligned with human values.

Original article Teaser

AI Morality 2.0

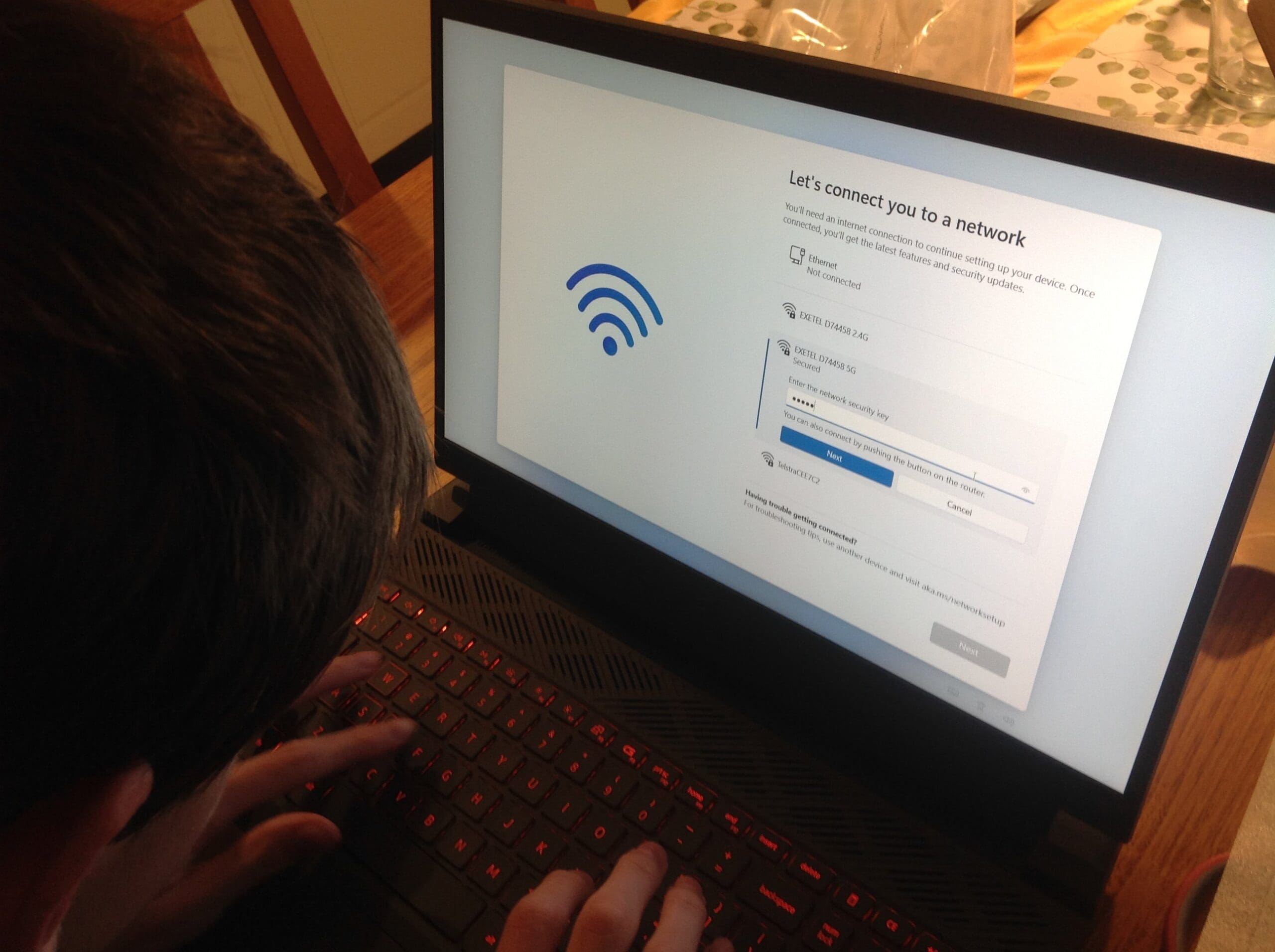

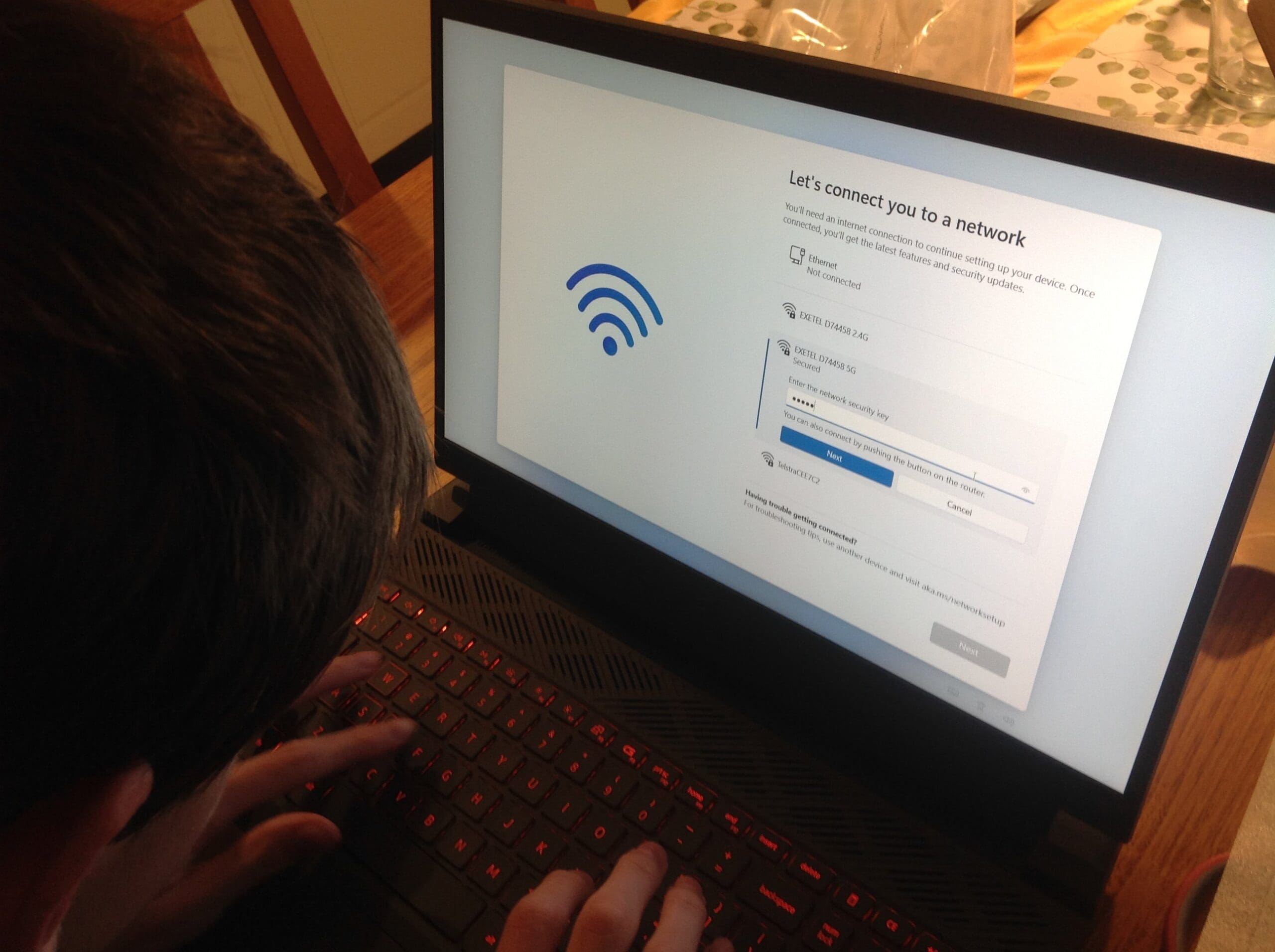

Photograph Source: THOMAS KLIKAUER

One might see human-to-human morality as “morality 1.0” and AI-to-human morality as “morality 2.0”. AI morality is fundamentally different from human-to-human morality. Our human-centered morality began hundreds of thousands of years ago, when early human tribes created functional communities that demanded codes of conduct shaping human-to-human relations. Fast forward to today: given all we know about the current stage of artificial intelligence (AI), it is extremely unlikely that we will end up in a “2001: A Space Odyssey” situation. In Kubrick’s movie, a computer called “HAL” responds to the human command, “open the pod bay doors”, with “I’m afraid I can’t do that, Dave”. In true – and very fictional – Hollywood style, the
